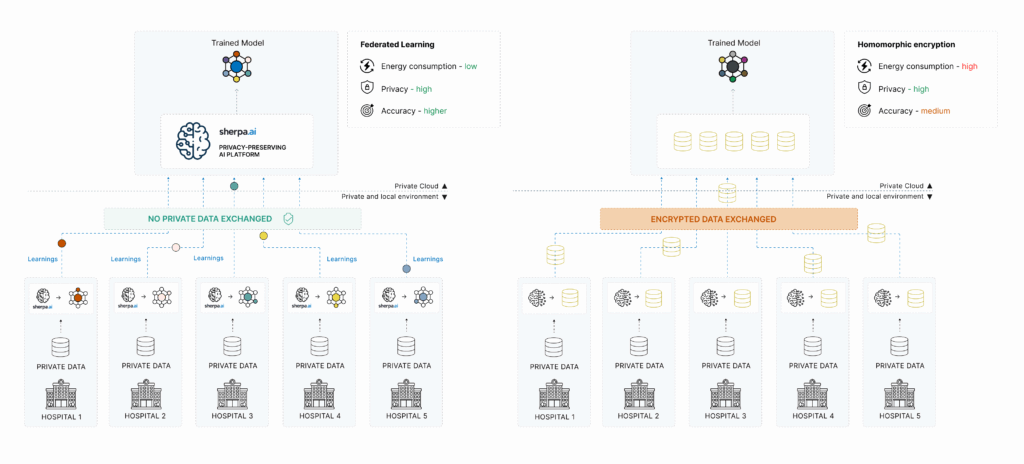

In an era where privacy matters more than ever, protecting data without sacrificing the power of artificial intelligence has become a top priority. To tackle this challenge, several privacy-preserving AI techniques have emerged. Among them, Federated Learning and Homomorphic Encryption stand out as two of the most prominent.

Both enable training models without direct access to raw data. However, their approaches, technological maturity, and real-world applicability differ greatly. In this article, we compare both methods and explain why Federated Learning—particularly our platform—is proving to be the most effective and scalable solution.

What is Homomorphic Encryption?

Homomorphic Encryption is a cryptographic technique that allows computations to be performed on encrypted data without ever decrypting it. It’s a theoretically elegant solution that ensures confidentiality even during data processing.

However, in practice, it presents major limitations:

Extremely high computational cost

Difficulty scaling to complex AI models

Very slow training and inference times

Limited applicability outside of research or very specific calculations

While promising in certain sensitive scenarios (e.g., for encrypted queries), its use in real-world AI model training remains highly constrained.
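To make "computing on encrypted data" concrete, here is a minimal sketch of a toy Paillier cryptosystem, a well-known additively homomorphic scheme. It is an illustration only: the primes are far too small to be secure, the function names (`keygen`, `encrypt`, `add_encrypted`, `scale_encrypted`) are our own for this example, and real deployments rely on audited libraries and on schemes that also support multiplication of ciphertexts.

```python
import math
import secrets

# Toy Paillier cryptosystem (additively homomorphic). Illustration only:
# the key is far too small to be secure and nothing here is hardened.
P = 2**31 - 1  # Mersenne prime, chosen only for readability
Q = 2**61 - 1  # Mersenne prime


def keygen(p=P, q=Q):
    """Return the public modulus n and the private key (lam, mu)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid shortcut because the generator is g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt an integer 0 <= m < n under the public modulus n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    # With g = n + 1, g^m mod n^2 equals 1 + m * n.
    return ((1 + m * n) % n2) * pow(r, n, n2) % n2


def decrypt(n, private_key, c):
    """Recover the plaintext integer from ciphertext c."""
    lam, mu = private_key
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return c1 * c2 % (n * n)


def scale_encrypted(n, c, k):
    """Raising a ciphertext to the power k multiplies the plaintext by k."""
    return pow(c, k, n * n)


if __name__ == "__main__":
    n, private_key = keygen()
    total = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))
    print(decrypt(n, private_key, total))  # 42, computed without decrypting the inputs
```

Even in this toy version, every encryption and every homomorphic operation is a modular exponentiation or multiplication on numbers twice the key length, which hints at where the computational cost listed above comes from.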

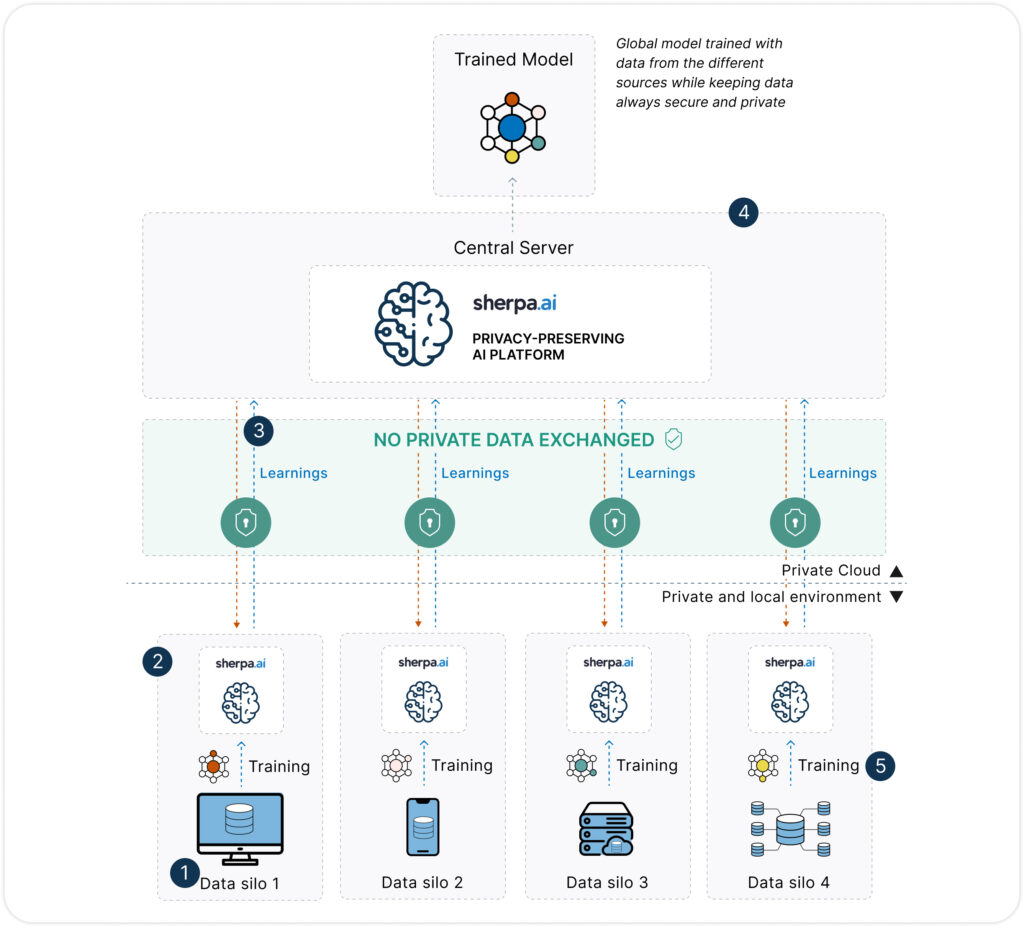

Federated Learning: A Solution by Design

Unlike Homomorphic Encryption, Federated Learning proposes a fundamental shift: instead of moving data to the model, it brings the model to the data. Each organization or device trains a local copy of the model on its own data and shares only model updates, never the data itself.

Key benefits:

True privacy: Data never leaves its source.

Privacy-by-design compliance with data protection regulations

Higher fidelity: models are trained on real, up-to-date data at its source

Scalable and adaptable to edge computing, mobile devices, healthcare systems, and sovereign data platforms
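The "bring the model to the data" loop can be sketched with federated averaging (FedAvg), a widely used baseline algorithm. This is a generic, simplified illustration and not a description of any particular platform's implementation: the linear model, the made-up client datasets, and the names `local_train`, `federated_average`, and `run_rounds` are assumptions for this example.

```python
# Minimal federated averaging sketch on a 1-D linear model y = w * x + b.
# Each client keeps its (x, y) pairs locally; only (w, b) and a sample
# count are sent back to the coordinator.

# Hypothetical client datasets, all generated from y = 2x + 1.
CLIENTS = [
    [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)],
    [(0.5, 2.0), (1.5, 4.0)],
    [(-2.0, -3.0), (0.0, 1.0), (2.0, 5.0)],
]


def local_train(weights, data, lr=0.05, epochs=5):
    """Run a few gradient-descent steps on one client's private data."""
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x
            grad_b += 2 * err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return (w, b), len(data)


def federated_average(updates):
    """Combine client models, weighting each by its number of samples."""
    total = sum(count for _, count in updates)
    w = sum(weights[0] * count for weights, count in updates) / total
    b = sum(weights[1] * count for weights, count in updates) / total
    return (w, b)


def run_rounds(clients, rounds=100):
    """Alternate local training and server-side averaging."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_train(global_weights, data) for data in clients]
        global_weights = federated_average(updates)
    return global_weights


if __name__ == "__main__":
    w, b = run_rounds(CLIENTS)
    print(f"w={w:.3f}, b={b:.3f}")  # approaches w=2, b=1
```

Note that the coordinator only ever calls `federated_average` on model parameters; the raw `(x, y)` pairs are read exclusively inside `local_train`, which in a real deployment would run on each participant's own infrastructure.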


Advantages

| Category / Dimension | Federated Learning (our platform) | Homomorphic Encryption |
|---|---|---|
| Privacy | | |
| Data privacy | ✅ High: data is never shared; only model updates are exchanged. | ✅ High: data remains encrypted even during computation. |
| Preprocessing (PII anonymization) | ✅ Not required: model trains directly at the data source. | ❌ Required: robust encryption and key management needed. |
| Regulatory compliance (GDPR, HIPAA) | ✅ Strong: aligns with data protection laws by avoiding data transfer. | ⚠️ Potentially strong: depends on encryption scheme and proper key handling. |
| Data control | ✅ Data remains under full control of the source organization. | ⚠️ Data is opaque to the system but operational control over encrypted processing is lost. |
| Performance | | |
| Data transmission | ✅ No: data remains in place. | ⚠️ Limited: encrypted data can be transferred, but remains inaccessible. |
| Energy consumption | ✅ Efficient: distributed training reduces unnecessary resource usage. | ❌ Very high: computation on encrypted data is extremely resource-intensive. |
| Training time | ✅ Reasonable: training is parallel and local, orchestrated by Sherpa.ai. | ⚠️ Very slow: encrypted operations can be thousands of times more expensive. |
| Model quality | ✅ High: trained on real, updated data. | ⚠️ Limited: constrained to simple models due to compute costs. |
| Scalability | | |
| Implementation cost | ✅ Low to moderate: no need for data duplication or complex legal setups. | ❌ High: requires advanced cryptography, computing power, and specialists. |
| Scalability | ✅ High: proven in real-world settings with thousands of nodes (banking, health, defense…). | ⚠️ Limited: hard to apply at scale due to compute demands. |
| Integration with existing systems | ✅ Flexible: open API and easy integration with current infrastructure. | ⚠️ Complex: requires adaptation to work with encrypted data at application level. |
| Governance | | |
| Auditability and traceability | ✅ High: each node controls its data and model contributions can be audited. | ⚠️ Weak: difficult to audit encrypted data processing and link it to original sources. |
| Participant control | ✅ Full: organizations retain complete control of their data, nodes, and policies. | ⚠️ Partial: depends on key manager and compute environment. |

GDPR Compliance and Risks of Homomorphic Encryption

One of the main goals of technologies like Federated Learning and Homomorphic Encryption is to ensure compliance with privacy regulations—especially the General Data Protection Regulation (GDPR) in Europe. However, their risk profiles differ significantly.

Federated Learning: strong, verifiable compliance

Our Federated Learning approach naturally aligns with GDPR principles:

Data minimization: no unnecessary data movement or replication

Purpose limitation: data is used only for specific tasks in a controlled environment

Accountability and traceability: each participant retains control over its data and node

Privacy by design: the system never requires access to raw data

This significantly reduces legal exposure and simplifies audit processes and DPIAs (Data Protection Impact Assessments).

Homomorphic Encryption: strong protection with new uncertainties

Although homomorphic encryption ensures complete confidentiality during processing, it doesn’t eliminate regulatory risks:

Key management becomes a critical risk: if private keys are compromised, encrypted data can be exposed.

Operating on encrypted personal data still counts as processing under GDPR, even if the data is unreadable.

Auditing is difficult: it’s hard to prove how encrypted data was processed without decrypting.

Risk of re-identification: if data is decrypted outside a controlled environment, the original privacy risks resurface.

Moreover, relying solely on encryption doesn’t guarantee data minimization or distributed governance, both of which are well addressed by Federated Learning.

Why Federated Learning Is the Better Fit

While both Federated Learning and Homomorphic Encryption aim to protect data privacy, only Federated Learning offers a practical, scalable, and production-ready solution for the real world.

1. Production-ready today

Unlike homomorphic encryption—still hindered by computational cost and technical complexity—Federated Learning is already used in real-world projects across healthcare, banking, mobility, and defense. Our platform has been successfully deployed in complex environments, orchestrating thousands of training nodes with regulatory compliance and operational efficiency.

2. Privacy without sacrificing performance

Federated Learning allows models to be trained on real, complete, and up-to-date data—without moving or duplicating sensitive information. This results in higher model quality than purely cryptographic methods, while still protecting privacy.

3. Naturally compliant with regulations

Because data always stays under the owner’s control and is never transferred, Federated Learning aligns seamlessly with GDPR, HIPAA, and other frameworks. It can also incorporate differential privacy, secure aggregation, or even partial encryption to enhance protection.
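As an illustration of the secure aggregation idea mentioned above, the sketch below uses pairwise additive masking: every pair of participants agrees on a random mask that one adds and the other subtracts, so the coordinator sees only masked updates, yet the masks cancel in the sum. This is a simplified, assumed setup: updates are already quantized to non-negative integers, a single random generator stands in for the pairwise key agreement a real protocol would use, dropouts are not handled, and the names `pairwise_masks`, `mask_update`, and `aggregate` are hypothetical.

```python
import random

MODULUS = 2**32  # all arithmetic is done modulo this value


def pairwise_masks(num_clients, dim, rng):
    """Build one mask per client such that all masks sum to zero mod MODULUS.

    In a real protocol each pair derives its shared mask via key agreement;
    here one generator plays that role for brevity.
    """
    masks = [[0] * dim for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] = (masks[i][k] + shared[k]) % MODULUS  # client i adds
                masks[j][k] = (masks[j][k] - shared[k]) % MODULUS  # client j subtracts
    return masks


def mask_update(update, mask):
    """Hide a client's integer-encoded update behind its mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(masked_updates):
    """Sum masked updates; the pairwise masks cancel out in the total."""
    dim = len(masked_updates[0])
    return [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]


if __name__ == "__main__":
    updates = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]  # made-up quantized updates
    masks = pairwise_masks(len(updates), 3, random.SystemRandom())
    masked = [mask_update(u, m) for u, m in zip(updates, masks)]
    print(aggregate(masked))  # [111, 222, 333]
```

The coordinator learns the aggregate but not any individual contribution, as long as the true sum stays below `MODULUS` and participants keep their pairwise masks secret.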

4. Scalable, flexible, and adaptable

From mobile devices to hospitals and defense systems, Federated Learning adapts to diverse environments without redesigning infrastructure. Our platform offers open APIs, deployment in the cloud, at the edge, or on-premise, and seamless integration with existing workflows.

The Most Advanced Platform in Europe

In this context, Sherpa.ai has developed the most advanced Federated Learning platform in Europe, specifically designed to address privacy, security, and regulatory challenges in demanding sectors.

What makes Sherpa.ai’s platform unique?

Built-in differential privacy: adds mathematical noise to model updates to prevent data reconstruction

Full client control: data never leaves the client’s environment; can be deployed on-premise or in private cloud

Auditing and traceability: includes tools to demonstrate GDPR and AI Act compliance

High interoperability and performance: supports popular AI frameworks (TensorFlow, PyTorch), advanced node orchestration, and efficient communication

Proven in production: used by banks, hospitals, telcos, and public institutions extracting value from data without compromising privacy
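To show what "adding mathematical noise to model updates" can look like, here is a minimal sketch of the common clip-then-add-Gaussian-noise recipe. It is a generic illustration, not Sherpa.ai's implementation: the parameter names `max_norm` and `noise_multiplier` and their default values are assumptions, and the resulting privacy guarantee depends on a privacy accounting step that is not shown here.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def privatize_update(update, max_norm=1.0, noise_multiplier=1.1, rng=None):
    """Clip a model update, then add Gaussian noise to every coordinate.

    Clipping bounds how much any single participant can influence the
    result; the noise standard deviation is proportional to that bound.
    """
    rng = rng or random.Random()
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]


if __name__ == "__main__":
    raw_update = [3.0, 4.0]  # made-up model update with L2 norm 5
    print(clip_update(raw_update, 1.0))  # approximately [0.6, 0.8]
    print(privatize_update(raw_update))  # clipped values plus random noise
```

A larger `noise_multiplier` gives stronger protection against reconstructing individual data points at the cost of a noisier, potentially less accurate model, so the value is typically tuned per use case.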

In a world increasingly shaped by privacy regulations and public trust, training AI models without compromising sensitive data is a strategic imperative. Both Federated Learning and Homomorphic Encryption offer innovative answers, but with vastly different levels of practicality and maturity.

While homomorphic encryption offers strong theoretical guarantees, its high computational cost and limited applicability make it unrealistic for many real-world use cases. In contrast, Federated Learning—especially via Sherpa.ai’s platform—has proven to be a practical, scalable, and regulation-ready solution.

By allowing organizations to train AI models directly where data resides, without moving or exposing it, Federated Learning enables ethical, secure, and high-performing artificial intelligence.

We are leading this transformation—empowering organizations with technology that protects privacy while enabling a more responsible, interoperable, and future-ready AI.